When you use AI to support business-critical decisions based on sensitive data, you need to be sure that you understand what AI is doing, and why. Is it making accurate, bias-aware decisions? Is it violating anyone’s privacy? Can you govern and monitor this powerful technology? Globally, organisations recognise the need for Responsible AI but are at different stages of the journey.

Responsible AI (RAI) is the only way to mitigate AI risks. Now is the time to evaluate your existing practices or create new ones to responsibly and ethically build technology and use data, and be prepared for future regulation. Future payoffs will give early adopters an edge that competitors may never be able to overtake.

PwC’s RAI diagnostic survey can help you evaluate your organisation’s performance relative to your industry peers. The survey takes 5-10 minutes to complete and generates a score that ranks your organisation, along with actions to consider.

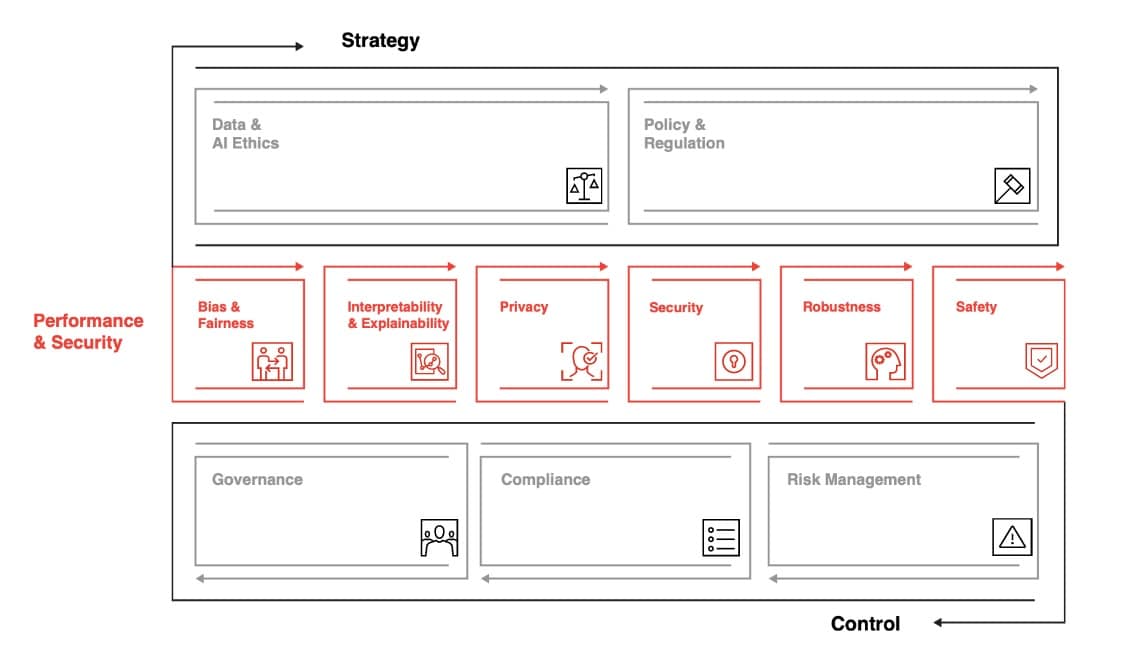

A variety of factors can affect AI risks, which change over time and across stakeholders, sectors, use cases, and technologies. Below are the six major risk categories for the application of AI technology.

AI algorithms that ingest real-world data and preferences as inputs may run a risk of learning and imitating possible biases and prejudices.

Performance risks include:

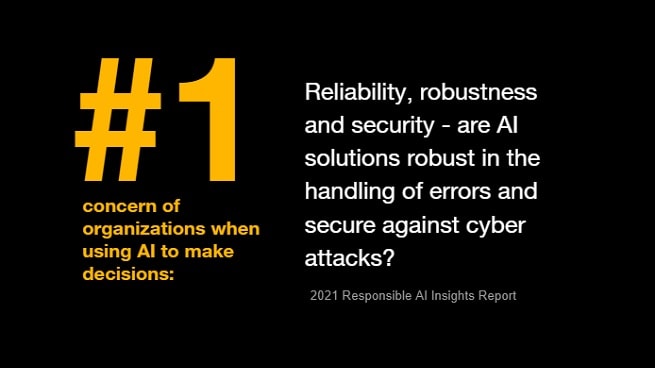

For as long as automated systems have existed, humans have tried to circumvent them. This is no different with AI.

Security risks include:

As with any other technology, AI should have organisation-wide oversight with clearly identified risks and controls.

Control risks include:

The widespread adoption of automation across all areas of the economy may impact jobs and shift demand to different skills.

Economic risks include:

The widespread adoption of complex and autonomous AI systems could result in “echo chambers” developing between machines, and could have broader impacts on human-to-human interaction.

Societal risks include:

AI solutions are designed with specific objectives in mind, which may compete with the overarching organisational and societal values within which they operate. Communities have often long agreed, informally, on a core set of values for society to operate by. There is a movement to identify sets of values, and thereby the ethics, to help drive AI systems, but there remains disagreement about what those ethics may mean in practice and how they should be governed. Thus, the above risk categories are inherently ethical risks as well.

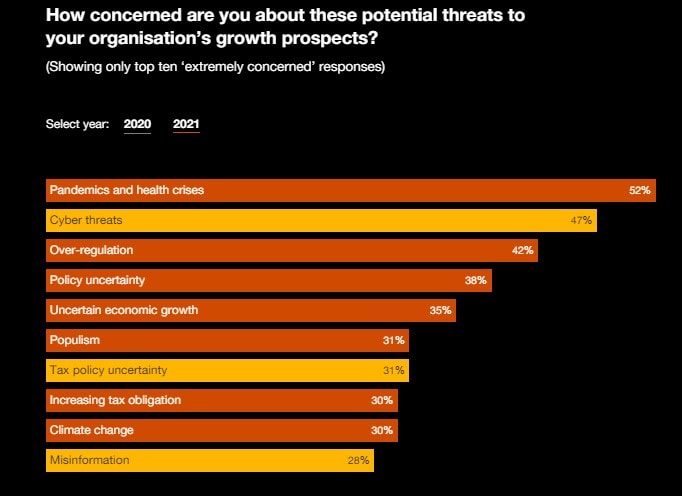

Your stakeholders, including board members, customers, and regulators, will have many questions about your organisation's use of AI and data, from how it’s developed to how it’s governed. You need not only to be ready to provide the answers, but also to demonstrate ongoing governance and regulatory compliance.

Our Responsible AI Toolkit is a suite of customizable frameworks, tools and processes designed to help you harness the power of AI in an ethical and responsible manner - from strategy through execution. With the Responsible AI toolkit, we’ll tailor our solutions to address your organisation’s unique business requirements and AI maturity.

Who is accountable for your AI system? The foundation for Responsible AI is an end-to-end enterprise governance framework, focusing on the risks and controls along your organization’s AI journey—from top to bottom. PwC developed robust governance models that can be tailored to your organisation. The framework enables oversight with clear roles and responsibilities, articulated requirements across three lines of defense, and mechanisms for traceability and ongoing assessment.

Are you anticipating future compliance? Complying with current data protection and privacy regulation and industry standards is just the beginning. We monitor the changing regulatory landscape and identify new compliance needs your organization should be aware of, and support the change management needed to create tailored organizational policies and prepare for future compliance.

How are you identifying risk? You need expansive risk detection and mitigation practices to assess development and deployment at every step of the journey, and address existing and newly identified risks and harms. PwC’s approach to Responsible AI works with existing risk management structures in your organization to identify new capabilities, and supports the development of any necessary operating models.

Is your AI unbiased? Is it fair? An AI system that is exposed to inherent biases of a particular data source is at risk of making decisions that could lead to unfair outcomes for a particular individual or group. Fairness is a social construct with many different and—at times—conflicting definitions. Responsible AI helps your organisation to become more aware of bias and potential bias, and take corrective action to help systems improve in their decision-making.
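One of the many fairness definitions alluded to above can be made concrete with a short calculation. The sketch below is illustrative only and is not part of PwC's toolkit: it assumes binary decisions (1 = favourable outcome) and a single group label per individual, and it measures the gap in favourable-outcome rates between groups (often called demographic parity difference). The function names and the sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of favourable decisions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in favourable-decision rate between any two groups."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical sample: eight decisions split across two groups.
decisions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

A gap of zero means every group receives favourable decisions at the same rate. Because fairness definitions can conflict, a small gap on this metric does not by itself show that a system is fair under other definitions.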

How was that decision made? An AI system that human users are unable to understand can lead to a “black box” effect, where organisations are limited in their ability to explain and defend business-critical decisions. Our Responsible AI approach can help. We provide services and processes to help you explain both overall decision-making and also individual choices and predictions, and we can tailor to the perspectives of different stakeholders based on their needs and uses.

How will your AI system protect and manage privacy? With PwC’s Responsible AI toolkit, you can identify strategies to lead with privacy considerations and to respond to consumers’ evolving expectations.

What are the security risks and implications that should be managed? Detecting and mitigating system vulnerabilities is critical to maintaining the integrity of algorithms and underlying data while preventing the possibility of malicious attacks. The great possibilities of AI come with the need for great protection and risk management. PwC’s approach to Responsible AI includes essential cybersecurity assessments to help you manage these risks effectively.

Will your AI behave as intended? An AI system that does not demonstrate stability and consistently meet performance requirements is at increased risk of producing errors and making the wrong decisions. To help make your systems more robust, Responsible AI includes services to help you identify potential weaknesses in models and monitor long-term performance. PwC has developed specific technical tools to support this area.
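As a rough illustration of what monitoring long-term performance can involve, the sketch below computes accuracy over consecutive, non-overlapping windows of predictions and flags any window that falls below a baseline by more than a tolerance. It is a minimal example under stated assumptions, not PwC's tooling: the function names, the window size, the baseline, and the tolerance are all hypothetical choices.

```python
def windowed_accuracy(predictions, labels, window):
    """Accuracy over consecutive, non-overlapping windows of the given size."""
    if window <= 0:
        raise ValueError("window must be a positive integer")
    correct = [int(p == y) for p, y in zip(predictions, labels)]
    return [
        sum(correct[start:start + window]) / window
        for start in range(0, len(correct) - window + 1, window)
    ]


def flag_degraded(accuracies, baseline, tolerance):
    """Indices of windows whose accuracy is below baseline minus tolerance."""
    threshold = baseline - tolerance
    return [i for i, acc in enumerate(accuracies) if acc < threshold]


# Hypothetical stream: the model is accurate at first, then degrades.
predictions = [1, 1, 0, 0, 1, 0, 1, 1]
labels = [1, 1, 0, 0, 0, 1, 1, 0]

accuracies = windowed_accuracy(predictions, labels, window=4)
degraded = flag_degraded(accuracies, baseline=0.9, tolerance=0.1)
print(accuracies, degraded)
```

In practice the labels usually arrive with a delay, so a check like this tends to be paired with monitoring of input data that does not depend on ground truth.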

Is your AI safe for society? AI system safety should be evaluated in terms of potential impact to users, ability to generate reliable and trustworthy outputs, and ability to prevent unintended or harmful actions. PwC’s Responsible AI services enable you to assess safety and societal impact to support this dimension.

Is your data use and AI ethical? Our Ethical data and AI Framework provides guidance and a practical approach to help your organisation with the development and governance of AI and data solutions that are ethical and moral.

As part of this dimension, our framework includes a unique approach to contextualising and applying ethical principles, while identifying and addressing key ethical risks.

Are you positioning your AI toward future compliance? As the regulatory landscape continues to evolve, maintaining compliance and responding to regulatory change will be critical. Leveraging PwC’s approach to Responsible AI can help you identify and evaluate relevant policy, industry standards and regulations that may impact your AI solutions. Operationalize regulatory compliance while factoring in localized differences.

We have the right team to build AI responsibly internally and for our clients, and bring big ideas to life across all stages of AI adoption.

PwC is proud to collaborate with the World Economic Forum to develop forward-thinking and practical guidelines for AI development in use across industries. Learn more here.

Find out by taking our free Responsible AI Diagnostic—drilling down into questions like:

Whether you're just getting started or are getting ready to scale, Responsible AI can help. Drawing on our proven capability in AI innovation and deep global business expertise, we'll assess your end-to-end needs, and design a solution to help you address your unique risks and challenges.

Contact us today. Learn more about how to become an industry leader in the responsible use of AI.
