The $320 billion question: Can you trust your AI?

Artificial intelligence (AI) is a transformational $320 billion opportunity in the Middle East. Yet as AI becomes more sophisticated, more and more decisions are being made by algorithmic ‘black boxes’. To have confidence in the outcomes, cement stakeholder trust and ultimately capitalise on the opportunities, you may need to understand how the algorithm arrived at its recommendation or decision. This is the goal of ‘Explainable AI’ (XAI). But opening up the black box is difficult and may not always be essential. So, when should you lift the lid, and how?

There is anxiety in the Middle East about the progress of technology: 75% of CEOs regard “the speed of technological change” as a business threat, second only to “changing consumer behaviour” (79%).

Emerging frontier

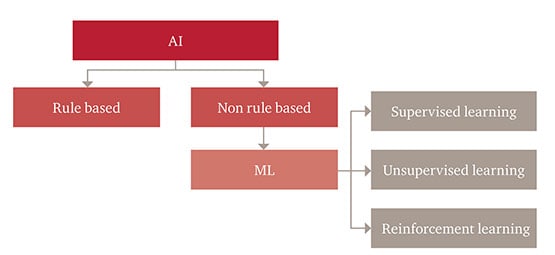

The emerging frontier of AI is Machine Learning (ML). Through ML, a variety of ‘unstructured’ data, including images, spoken language and internet content (the ‘digital exhaust’ left by people and companies), is being used to inform medical diagnoses, power recommender systems, make investment decisions and help driverless cars see stop signs.

Operating in the dark

The central challenge is that many of the AI applications using ML operate within black boxes, offering little if any discernible insight into how they reach their outcomes.

For relatively benign, high-volume decision-making applications such as an online retail recommender system, an opaque yet accurate algorithm is often the commercially optimal approach. However, AI used for ‘big ticket’ risk decisions in finance, diagnostic decisions in healthcare and safety-critical systems in autonomous vehicles carries high stakes for businesses and society, so the decision-making AI must be able to explain itself.

Benefits of Explainable AI

Building interpretability into AI systems brings significant business benefits. As well as helping organisations respond to pressures such as regulation and adopt good practices around accountability and ethics, investing in explainability today puts them on the front foot.

The greater the confidence in the AI, the faster and more widely it can be deployed. Your business will also be in a stronger position to foster innovation and move ahead of your competitors in developing and adopting next-generation capabilities.

- Optimise

- Retain

- Maintain

- Comply

Optimise

Model performance

One of the keys to maximising performance is understanding a model’s potential weaknesses. The better you understand what a model is doing and why it sometimes fails, the easier it is to improve it. Explainability is a powerful tool for detecting flaws in the model and biases in the data, because both are easier to spot when you understand how the model arrives at its predictions, and addressing them builds trust among all users. It also helps in verifying predictions, improving models and gaining new insights into the problem at hand.

Decision making

The primary use of machine learning applications in business is automated decision making. Often, however, models are wanted primarily for analytical insight. For example, you could train a model to predict store sales across a large retail chain using data on location, opening hours, weather, time of year, products carried, outlet size and so on. The model would let you predict sales across your stores on any given day of the year in a variety of weather conditions. An explainable model goes further: it reveals the main drivers of sales, and you can use that information to boost revenue.
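The store-sales example can be made concrete with permutation importance, one common model-agnostic way to see which inputs drive a model’s predictions (our choice of technique; the article does not name one). The sketch below is plain Python under stated assumptions: the store records are synthetic, the feature names are hypothetical, and `black_box_model` is a deliberately simple stand-in for a trained model so the result is easy to check. Shuffling a feature the model relies on raises its error; shuffling one it ignores does not.

```python
import random
from statistics import mean

# Hypothetical feature names for illustration only.
FEATURES = ["outlet_size", "temperature", "opening_hours"]


def make_synthetic_stores(n=500, seed=0):
    """Synthetic store-day records: sales depend on size and temperature only."""
    rng = random.Random(seed)
    rows, sales = [], []
    for _ in range(n):
        row = {
            "outlet_size": rng.uniform(100, 1000),  # square metres
            "temperature": rng.uniform(15, 45),     # degrees Celsius
            "opening_hours": rng.uniform(8, 24),    # hours open that day
        }
        noise = rng.gauss(0, 50)
        rows.append(row)
        sales.append(5.0 * row["outlet_size"] - 20.0 * row["temperature"] + noise)
    return rows, sales


def black_box_model(row):
    """Stand-in for a trained model; permutation importance only needs predictions."""
    return 5.0 * row["outlet_size"] - 20.0 * row["temperature"]


def mean_squared_error(model, rows, targets):
    return mean((model(r) - y) ** 2 for r, y in zip(rows, targets))


def permutation_importance(model, rows, targets, feature, n_repeats=5, seed=0):
    """Average rise in error when one feature's values are shuffled across rows."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    increases = []
    for _ in range(n_repeats):
        shuffled = [r[feature] for r in rows]
        rng.shuffle(shuffled)
        # Break the link between this feature and sales, keep every other column.
        permuted = [{**r, feature: v} for r, v in zip(rows, shuffled)]
        increases.append(mean_squared_error(model, permuted, targets) - baseline)
    return mean(increases)


rows, sales = make_synthetic_stores()
importances = {f: permutation_importance(black_box_model, rows, sales, f) for f in FEATURES}
for feature, score in sorted(importances.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{feature:>14}: {score:,.0f}")
```

Under these assumptions, outlet size should come out as the dominant driver, temperature as a minor one and opening hours as irrelevant. In practice you would pass the prediction function of your own fitted model; scikit-learn users can get the same measure from `sklearn.inspection.permutation_importance`.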

Retain

Control

To move from proof of concept to full implementation, you need to be confident that your systems satisfy their intended requirements and do not exhibit unwanted behaviour. If a system makes a mistake, the organisation needs to be able to recognise that something is going wrong so it can take corrective action or even shut the AI system down. XAI can help your organisation retain control over AI by monitoring performance, flagging errors and providing a mechanism to turn the system off. From a data privacy point of view, XAI can help to ensure that only permitted data is used, and only for an agreed purpose, and can make it possible to delete data if required.
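The control mechanisms described above (monitoring performance, flagging errors, a way to turn the system off) can be sketched as a thin wrapper around a model. This is a minimal illustration under assumptions, not an XAI technique in itself: `ModelGuard`, its thresholds and the idea of comparing each prediction with a later-observed outcome are all hypothetical choices made for this example.

```python
from collections import deque


class ModelGuard:
    """Hypothetical wrapper that monitors a model and can switch it off."""

    def __init__(self, model, window=100, max_error_rate=0.2, min_samples=20):
        self.model = model                          # any callable: input -> prediction
        self.recent_errors = deque(maxlen=window)   # True where a prediction was wrong
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples              # avoid tripping on too little evidence
        self.enabled = True

    def predict(self, x):
        if not self.enabled:
            raise RuntimeError("model switched off pending human review")
        return self.model(x)

    def record_outcome(self, prediction, actual):
        """Log whether a past prediction was right; trip the switch if errors pile up."""
        self.recent_errors.append(prediction != actual)
        if (len(self.recent_errors) >= self.min_samples
                and self.error_rate() > self.max_error_rate):
            self.switch_off()

    def error_rate(self):
        if not self.recent_errors:
            return 0.0
        return sum(self.recent_errors) / len(self.recent_errors)

    def switch_off(self):
        """Kill switch, usable manually or triggered automatically."""
        self.enabled = False


if __name__ == "__main__":
    # Hypothetical rule-based classifier: flag transactions above 10,000.
    guard = ModelGuard(lambda amount: amount > 10_000,
                       window=10, max_error_rate=0.3, min_samples=5)
    for amount, was_fraud in [(12_000, True), (500, False), (15_000, True),
                              (800, False), (20_000, False), (300, True), (11_000, False)]:
        if not guard.enabled:
            print("guard tripped; routing decisions to a human")
            break
        guard.record_outcome(guard.predict(amount), was_fraud)
    print(f"error rate {guard.error_rate():.2f}, enabled={guard.enabled}")
```

A short error window makes the guard react quickly to a run of mistakes; a longer one is less prone to false alarms. The thresholds are placeholders that would be set from the criticality of the use case.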

Safety

Concerns have been raised about the safety and security of AI systems, especially as they become more powerful and widespread. These concerns can be traced to a range of factors, including deliberately unethical design, engineering oversights, hacking and the effects of the environment in which the AI operates. XAI can help to identify these kinds of faults. It’s also important to work closely with cyber detection and protection teams to guard against hacking and deliberate manipulation of learning and reward systems.

Maintain

Trust

Building trust in artificial intelligence means providing proof to a wide array of stakeholders that the algorithms are making the correct decisions for the right reasons. Explainable algorithms can provide this up to a point, but even with state-of-the-art machine learning evaluation methods and highly interpretable model architectures, the context problem persists: AI is trained on historical datasets that reflect implicit assumptions about the way the world works, and those assumptions may not hold when circumstances change. By gaining an intuitive understanding of a model’s behaviour, the people responsible for it can spot when it is likely to fail and take appropriate action. XAI also builds trust by strengthening the stability, predictability and repeatability of interpretable models: when stakeholders see a stable set of results over time, their confidence grows.

Ethics

It’s important that a moral compass is built into AI training from the outset and that AI behaviour is closely monitored thereafter through XAI evaluation. Where appropriate, a formal mechanism that aligns a company’s technology design and development with its ethical values, principles and risk appetite may be necessary.

Comply

Accountability

It’s important to be clear who is accountable for an AI system’s decisions. This in turn demands a clear, XAI-enabled understanding of how the system operates, how it makes decisions or recommendations, how it learns and evolves over time, and how to ensure it functions as intended. To assign responsibility for an adverse event caused by AI, a chain of causality must be established from the AI agent back to the person or organisation that can reasonably be held responsible for its actions. Depending on the nature of the adverse event, responsibility will sit with different actors within the causal chain that led to the problem.

Regulation

While AI is lightly regulated at present, this is likely to change as its impact on everyday life becomes more pervasive. Regulators and standards bodies are focusing on a number of AI-related areas, with standards for governance, accuracy, transparency and explainability high on the agenda. Further regulatory priorities include safeguarding potentially vulnerable consumers.

Use Case Criticality

Explainable AI works in conjunction with PwC’s overarching framework for AI best practice, Responsible AI, which helps organisations deliver AI in a responsible manner. For new use cases, the framework evaluates six main criteria of use case criticality and recommends the optimal set of practices at each step of the Responsible AI journey, informing Explainable AI best practice for that use case. For existing AI implementations, the outcome of the assessment is a gap analysis comparing an organisation’s ability to explain model predictions at the required level of detail against PwC leading practice, together with the organisation’s readiness to deliver on AI (PwC Responsible AI, 2017).

- The combined total of the economic impact of a single prediction, the economic utility of understanding why that prediction was made, and the intelligence derived from a global understanding of the process being modelled.

- The number of decisions an AI application has to make, for example two billion a day versus three a month.

- The robustness of the application: its accuracy and its ability to generalise well to unseen data.

- The regulation determining the acceptable use of a given AI application and the level of functional validation it needs.

- How the AI application interacts with the business, stakeholders and society, and the extent to which a given use case could affect business reputation.

- The potential harm from an adverse outcome of using the algorithm, which extends beyond the immediate consequences to the wider organisational environment: executive, operational, technology, societal (including customers), ethical and workforce.